Data warehouse software sits at the heart of data-driven organizations, shaping how they gather, manage, and analyze large volumes of information. It brings diverse data streams together in one place, where they can be explored with a few clicks. Successive generations of these platforms have become more powerful, more flexible, and more important for decision-makers seeking to outpace the competition.

Modern data warehouse software acts as a central hub that unifies disparate sources and keeps data both accurate and accessible. From their architecture and core features to their integration with business intelligence tools, these solutions shape how businesses extract value from their data. Security, compliance, and operational best practices run through every stage, which is why data warehouses form the foundation for effective analytics and strategic growth.

Overview of Data Warehouse Software

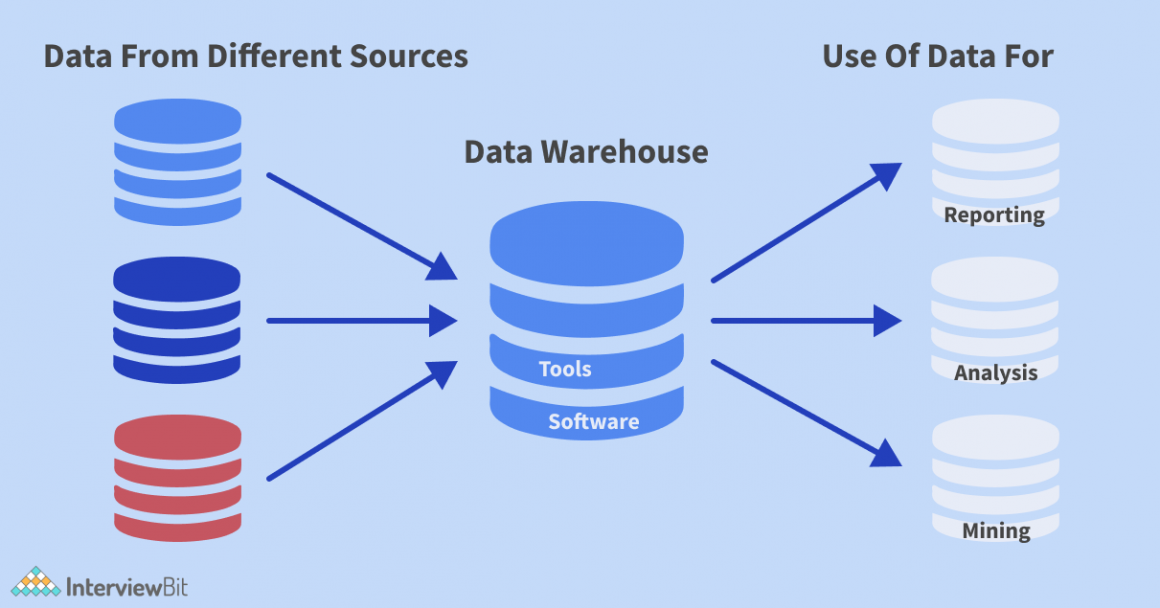

Data warehouse software plays a pivotal role in modern data management strategies by enabling organizations to consolidate and analyze large volumes of data from disparate sources. Its core function allows businesses to transform raw data into actionable insights, supporting informed decision-making processes.

The primary purpose of data warehouse software is to centralize data storage, streamline reporting, and facilitate advanced analytics. Data warehousing began with on-premises systems designed to aggregate transactional data for historical analysis. Over time, these evolved into cloud-based and hybrid solutions capable of handling diverse data formats and real-time processing.

Evolution and Architecture of Data Warehouse Solutions

Data warehouse solutions have progressed from monolithic, hardware-bound environments to flexible, scalable systems that leverage the power of distributed computing. Early architectures were characterized by rigid ETL pipelines and batch processing. Today, modern platforms offer elasticity, support for semi-structured data, and integration with machine learning tools.

A typical data warehouse architecture consists of several layers:

- Data Source Layer: Operational databases, external feeds, and application-generated data.

- Staging Layer: Raw data is collected, cleansed, and formatted for further processing.

- Data Storage Layer: Structured databases or data lakes where processed data is stored.

- Presentation Layer: Interfaces for querying, reporting, and analytics.

The architecture can be visualized as a multi-tiered pipeline, where data flows from sources to a staging area, is transformed and loaded into storage, and finally accessed by business intelligence or analytics applications.
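The layered flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the in-memory SQLite database stands in for the storage layer, and the `orders` table, the sample records, and all function names are assumptions made up for the example.

```python
import sqlite3

# Data source layer: hypothetical records as an operational system might emit them.
SOURCE_ROWS = [
    {"order_id": 1, "region": " north ", "amount": "120.50"},
    {"order_id": 2, "region": "South", "amount": "80.00"},
    {"order_id": 3, "region": "north", "amount": None},  # incomplete record
]


def stage(rows):
    """Staging layer: cleanse and format raw records, dropping incomplete ones."""
    staged = []
    for row in rows:
        if row["amount"] is None:
            continue  # skip records that cannot be loaded
        staged.append(
            (row["order_id"], row["region"].strip().lower(), float(row["amount"]))
        )
    return staged


def load(conn, staged):
    """Storage layer: persist cleansed rows in a structured table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", staged)
    conn.commit()


def revenue_by_region(conn):
    """Presentation layer: the kind of query a report or dashboard would issue."""
    cur = conn.execute(
        "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
    )
    return cur.fetchall()


def run_pipeline():
    """Run source -> staging -> storage -> presentation end to end."""
    conn = sqlite3.connect(":memory:")
    load(conn, stage(SOURCE_ROWS))
    return revenue_by_region(conn)
```

Calling `run_pipeline()` returns one total per region, with the incomplete third record filtered out in staging before it reaches storage.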

Core Features of Data Warehouse Software

Modern data warehouse software distinguishes itself through a suite of features that address the demands of contemporary data-driven enterprises. These features are essential for ensuring high performance, scalability, robust security, and seamless data integration.

Essential Features and Their Benefits

The following table outlines the must-have features of leading data warehouse solutions, along with their descriptions, benefits, and examples:

| Feature | Description | Benefit | Example |
|---|---|---|---|
| Data Integration | Aggregating data from multiple sources into a unified repository. | Simplifies analysis and ensures consistency. | ETL/ELT pipelines, connectors. |
| Scalability | Ability to handle growing data volumes and user demands. | Supports business growth and fluctuating workloads. | Elastic compute/storage, autoscaling. |
| Security | Comprehensive controls to protect data from unauthorized access. | Addresses compliance needs and builds trust. | Encryption, RBAC, auditing. |
| Data Governance | Policies and tools to ensure data quality, integrity, and compliance. | Improves reliability and meets regulatory requirements. | Data catalogs, metadata management. |
| Performance Optimization | Techniques to enhance query speed and resource utilization. | Delivers fast insights, reduces costs. | Indexing, partitioning, caching. |

Data Integration, Scalability, and Security

Seamless data integration allows organizations to bring together data from operational systems, third-party sources, and cloud apps. This is achieved through standardized APIs, connectors, and robust ETL/ELT processes. Scalability is a defining characteristic, with platforms offering dynamic resource allocation to meet varying analytical workloads. Security remains paramount, incorporating encryption at rest and in transit, granular access controls, and continuous monitoring.

Enforcement of Data Governance

Data governance within data warehouse software is maintained through layered access policies, data lineage tracking, and automated data quality checks. Metadata catalogs help users understand data origins and transformations, while auditing features ensure compliance and traceability. These controls enable organizations to maintain trust in their data and adhere to industry regulations.
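As an illustration of what automated data quality checks might look like, here is a minimal Python sketch. The rule names, column names, and sample rows are hypothetical; real platforms typically express such rules through their own governance tooling or SQL constraints rather than hand-written code like this.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    failing_rows: int


def check_not_null(rows, column):
    """Fail for every row where the column is missing or None."""
    failing = sum(1 for r in rows if r.get(column) is None)
    return CheckResult(f"not_null({column})", failing == 0, failing)


def check_unique(rows, column):
    """Fail for every row that repeats an already-seen value."""
    seen, dupes = set(), 0
    for r in rows:
        value = r.get(column)
        if value in seen:
            dupes += 1
        seen.add(value)
    return CheckResult(f"unique({column})", dupes == 0, dupes)


def check_range(rows, column, low, high):
    """Fail for every non-null value outside the inclusive range [low, high]."""
    failing = sum(
        1 for r in rows
        if r.get(column) is not None and not (low <= r[column] <= high)
    )
    return CheckResult(f"range({column})", failing == 0, failing)


# Hypothetical sample data with one null customer and one duplicate order id.
SAMPLE_ROWS = [
    {"order_id": 1, "customer_id": "c1", "amount": 10.0},
    {"order_id": 2, "customer_id": None, "amount": 25.0},
    {"order_id": 2, "customer_id": "c3", "amount": 40.0},
]


def run_checks(rows):
    """Apply a fixed, illustrative rule set and return one result per rule."""
    return [
        check_not_null(rows, "customer_id"),
        check_unique(rows, "order_id"),
        check_range(rows, "amount", 0, 1_000_000),
    ]
```

Each result records how many rows failed, which is the kind of figure an auditing or alerting process could track over time.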

Types of Data Warehouse Architectures

Choosing an appropriate data warehouse architecture is crucial for aligning with business needs, data volumes, and operational models. The evolution of data warehousing has led to a range of architectures, each with unique advantages and trade-offs.

Comparison of Common Architectures

The table below contrasts traditional, cloud-based, and hybrid data warehouse architectures:

| Architecture Type | Description | Advantages | Challenges |
|---|---|---|---|
| Traditional (On-premises) | Physical hardware managed on-site, with in-house maintenance. | High control, compliance, and data residency. | High upfront costs, limited scalability, ongoing maintenance. |
| Cloud-based | Hosted in public or private clouds, resources provisioned on demand. | Elastic scalability, low upfront investment, managed services. | Data security concerns, potential vendor lock-in. |
| Hybrid | Combines on-premises and cloud environments for flexibility. | Balanced control and scalability, gradual cloud migration. | Integration complexity, potential data latency. |

Flow of Data in Different Architectures

In a traditional architecture, data moves from operational databases into a staging area via batch ETL processes, then into the warehouse for storage and analytics. In cloud-based models, data flows through cloud-native ETL/ELT services, and storage and compute are often separated so that each can scale independently. Hybrid architectures integrate both, allowing certain data to remain on-premises for regulatory reasons while the bulk of analytics workloads are offloaded to the cloud.

A typical data pipeline in each architecture:

- Traditional: Source systems → ETL server → On-premises data warehouse → Reporting/BI tools

- Cloud-based: Cloud data sources → Cloud ETL services → Cloud data warehouse storage → Cloud BI tools

- Hybrid: On-premises and cloud sources → Hybrid ETL orchestration → Distributed storage (on-prem and cloud) → Unified analytics layer

Leading Data Warehouse Software Examples

The current data warehouse landscape features several prominent software platforms, each offering robust capabilities and specialized features. Selecting the right platform depends on factors such as scale, performance, integration needs, and cost.

Popular Software Platforms and Key Differentiators

Innovation and market demand have driven the development of many data warehouse platforms. Key examples include:

- Amazon Redshift: Highly scalable, integrates seamlessly with the AWS ecosystem, and supports complex analytical queries at petabyte scale.

- Snowflake: Cloud-native, offers instant scalability, separation of compute and storage, and supports multi-cloud deployments.

- Google BigQuery: Serverless architecture, real-time analytics, and deep integration with Google Cloud Platform services.

- Microsoft Azure Synapse Analytics: Combines data warehousing, big data analytics, and data integration in a single platform, tightly integrated with Azure services.

Use Cases for Leading Platforms

Understanding the strengths of these solutions helps match platform capabilities to specific business needs. For instance, Amazon Redshift is favored for large-scale analytics in retail and finance, while Snowflake’s flexibility appeals to SaaS providers and organizations with diverse cloud strategies. Google BigQuery’s serverless model is ideal for real-time data science applications, and Azure Synapse excels in industries leveraging Microsoft’s ecosystem.

Summary Table: Pricing Models, Scalability, and Integrations

The table below summarizes key considerations for each platform:

| Platform | Pricing Model | Scalability | Supported Integrations |
|---|---|---|---|
| Amazon Redshift | Pay-as-you-go, reserved instances | Petabyte scale, elastic clusters | AWS services, JDBC/ODBC, third-party ETL tools |
| Snowflake | Consumption-based (per second of compute) | Automatic scaling, multi-cluster | AWS, Azure, GCP, Snowpipe, major BI/ETL tools |
| Google BigQuery | On-demand (billed by data scanned), capacity-based | Serverless, virtually unlimited | Google Cloud services, Dataflow, Looker, API access |
| Azure Synapse Analytics | Pay-per-use, reserved capacity | Dedicated and serverless SQL pools, on-demand provisioning | Azure Data Factory, Power BI, third-party connectors |

Implementation Procedures and Best Practices

Implementing data warehouse software involves methodical planning and execution to ensure system stability, data accuracy, and alignment with business objectives. A disciplined approach helps minimize risk and optimizes long-term value.

Step-by-Step Implementation Procedures

A typical implementation journey includes several key milestones:

- Requirements Gathering: Define business goals, data sources, and reporting needs.

- Solution Design: Architect data models, select integration methods, and plan storage.

- Infrastructure Setup: Provision hardware or cloud resources, configure environments.

- ETL/ELT Development: Build pipelines for data extraction, transformation, and loading.

- Data Migration: Migrate legacy data, validate and reconcile records.

- Testing: Conduct unit, integration, and user acceptance testing to verify functionality.

- Deployment: Launch the solution, monitor performance, and provide user training.

- Ongoing Maintenance: Implement monitoring, patching, and continuous improvement processes.

Best Practices for Migration from Legacy Systems

Transitioning from legacy data warehouses to modern platforms demands careful coordination. Successful migrations prioritize data quality, minimize downtime, and maintain historical continuity. Employing phased migration strategies allows for incremental validation and rollback options.
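Incremental validation during a phased migration often comes down to reconciling each migrated table against its legacy counterpart. The sketch below shows one possible approach, comparing key sets and an order-independent fingerprint of the rows. The function names and row layout are assumptions for illustration, and a real migration would usually compute such checks inside each database rather than in application memory.

```python
import hashlib


def table_fingerprint(rows, key):
    """Return (row_count, digest) for a list of dict rows, independent of row order."""
    digest = hashlib.sha256()
    for row in sorted(rows, key=lambda r: r[key]):
        # Serialize columns in a fixed order so equal rows hash identically.
        canonical = "|".join(f"{col}={row[col]}" for col in sorted(row))
        digest.update(canonical.encode("utf-8"))
        digest.update(b"\n")
    return len(rows), digest.hexdigest()


def reconcile(legacy_rows, target_rows, key):
    """Compare a legacy table with its migrated copy and summarize differences."""
    legacy_keys = {r[key] for r in legacy_rows}
    target_keys = {r[key] for r in target_rows}
    return {
        "missing_in_target": sorted(legacy_keys - target_keys),
        "unexpected_in_target": sorted(target_keys - legacy_keys),
        "fingerprints_match": (
            table_fingerprint(legacy_rows, key) == table_fingerprint(target_rows, key)
        ),
    }
```

A phase would be considered validated only when both key lists are empty and the fingerprints match; any other result is a signal to investigate or roll back that phase.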

Checklist for Successful Deployment and Maintenance

The following checklist covers essential aspects for a smooth deployment:

- Establish clear business objectives and success metrics.

- Engage stakeholders across IT and business units early.

- Document data lineage, transformation rules, and source mappings.

- Implement robust data validation and error handling mechanisms.

- Set up automated monitoring and alerting for key system metrics.

- Provide comprehensive user training and support documentation.

- Schedule periodic reviews for performance optimization and governance updates.

Methods for Data Loading and Transformation

Efficiently loading and transforming data is fundamental to maximizing the accuracy and value of a data warehouse. The choice between ETL and ELT processes is influenced by data volumes, platform capabilities, and the complexity of transformations required.

ETL and ELT Processes Explained

ETL (Extract, Transform, Load) involves extracting data from sources, transforming it into the desired format, and then loading it into the data warehouse. ELT (Extract, Load, Transform) reverses the last two steps, loading raw data first and performing transformations within the warehouse using its native processing power.

ETL is preferred when data must be cleansed and standardized before storage, especially in systems with strict data quality requirements. ELT is increasingly popular in cloud platforms, where scalable compute resources enable rapid, in-warehouse transformations.
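The difference between the two approaches is easiest to see side by side. In this minimal sketch, an in-memory SQLite database stands in for the warehouse, and the table names and sample records are invented for the example. The ETL path cleans records in application code before loading; the ELT path loads the raw records first and cleans them with SQL inside the database.

```python
import sqlite3

# Hypothetical raw extract: untrimmed names, amounts as text, one unusable value.
RAW = [("  Alice ", "42.5"), ("BOB", "17"), ("carol", "n/a")]


def is_number(text):
    """Return True if the text parses as a float."""
    try:
        float(text)
        return True
    except ValueError:
        return False


def etl(conn):
    """ETL: transform in application code, then load only the clean rows."""
    clean = [
        (name.strip().title(), float(amount))
        for name, amount in RAW
        if is_number(amount)
    ]
    conn.execute("CREATE TABLE sales_etl (customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?)", clean)
    return conn.execute(
        "SELECT customer, amount FROM sales_etl ORDER BY customer"
    ).fetchall()


def elt(conn):
    """ELT: load raw rows untouched, then transform with SQL in the warehouse."""
    conn.execute("CREATE TABLE sales_raw (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO sales_raw VALUES (?, ?)", RAW)
    conn.execute(
        """
        CREATE TABLE sales_elt AS
        SELECT UPPER(SUBSTR(TRIM(customer), 1, 1))
               || LOWER(SUBSTR(TRIM(customer), 2)) AS customer,
               CAST(amount AS REAL) AS amount
        FROM sales_raw
        WHERE amount GLOB '[0-9]*'  -- keep rows whose amount starts with a digit
        """
    )
    return conn.execute(
        "SELECT customer, amount FROM sales_elt ORDER BY customer"
    ).fetchall()
```

Both functions produce the same cleaned table. The practical difference is where the work runs and what is retained: the ELT path keeps `sales_raw` in the warehouse, so transformations can be revised and re-run later without re-extracting from the source.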

Table: Tools and Techniques for Data Extraction, Transformation, and Loading

| Step | Tools/Techniques | Best Use Case |
|---|---|---|
| Extraction | APIs, connectors, data replication tools | Aggregating data from diverse, real-time or batch sources |
| Transformation | Data wrangling tools, SQL scripting, cloud-native processing (e.g., dbt) | Complex calculations, data quality enforcement, enrichment |
| Loading | Bulk loaders, streaming ingestion tools (e.g., Kafka, AWS Kinesis) | High-volume or real-time data delivery to storage |

Real-Time Data Ingestion in Modern Platforms

Real-time data ingestion is achieved through streaming technologies that capture and process data events as they occur. Leading solutions utilize message brokers and cloud-native event streaming platforms to ensure that dashboards and analytics reflect the latest available information, supporting time-sensitive business decisions.
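A common pattern behind streaming ingestion is micro-batching: events are buffered briefly and written to the warehouse in small groups. The sketch below illustrates the idea only. The standard-library `queue.Queue` stands in for a message broker (real broker clients have different APIs), SQLite stands in for the warehouse, and the `page_views` table and event fields are hypothetical.

```python
import queue
import sqlite3


def flush(conn, buffer):
    """Write the buffered events in one batch, then empty the buffer."""
    conn.executemany(
        "INSERT INTO page_views VALUES (:user_id, :page, :ts)", buffer
    )
    conn.commit()
    buffer.clear()


def ingest_stream(events, conn, batch_size=2):
    """Drain the event queue into the warehouse in small batches.

    Returns the number of batches written.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS page_views (user_id TEXT, page TEXT, ts INTEGER)"
    )
    batches = 0
    buffer = []
    while True:
        try:
            buffer.append(events.get_nowait())
        except queue.Empty:
            break  # nothing left to read in this sketch
        if len(buffer) >= batch_size:
            flush(conn, buffer)
            batches += 1
    if buffer:  # write any remaining partial batch
        flush(conn, buffer)
        batches += 1
    return batches


def demo():
    """Push three sample events through the pipeline; return (batches, rows)."""
    events = queue.Queue()
    for i, page in enumerate(["/home", "/pricing", "/docs"]):
        events.put({"user_id": f"u{i}", "page": page, "ts": 1700000000 + i})
    conn = sqlite3.connect(":memory:")
    batches = ingest_stream(events, conn)
    rows = conn.execute("SELECT COUNT(*) FROM page_views").fetchone()[0]
    return batches, rows
```

The batch size trades latency against write overhead: smaller batches make dashboards fresher, while larger ones reduce the number of round trips to storage. A long-running consumer would block on the broker instead of stopping when the queue is empty.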

Integration with Business Intelligence Tools

Integration with business intelligence (BI) tools transforms the raw potential of data warehouses into actionable insights for business users. This synergy enables organizations to visualize trends, monitor key metrics, and empower data-driven strategies.

Connecting Data Warehouse Software with BI Platforms

Most data warehouse platforms offer standardized interfaces, such as ODBC, JDBC, and RESTful APIs, allowing seamless connections with a broad range of BI tools. This integration ensures that data is available in near real-time for dashboards, reports, and ad hoc analysis.

Process of Data Visualization and Analysis

The process typically involves:

- Establishing a secure connection between the BI tool and the data warehouse.

- Configuring user authentication and data access permissions.

- Importing and modeling data sets to align with analytical requirements.

- Designing interactive dashboards and custom reports.

- Sharing insights with stakeholders through collaboration features.
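Under the hood, a BI tool carrying out these steps issues parameterized queries over a standard database interface and shapes the results for charts. The following sketch approximates that with Python's DB-API, using SQLite as a stand-in for a warehouse driver. The `sales` table, its columns, and the function names are assumptions made for illustration.

```python
import sqlite3


def build_demo_warehouse():
    """Create an in-memory stand-in for a warehouse with a small sales table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (sale_date TEXT, region TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [
            ("2024-01-05", "emea", 100.0),
            ("2024-01-20", "emea", 50.0),
            ("2024-02-03", "apac", 75.0),
        ],
    )
    return conn


def monthly_revenue(conn, region):
    """Return revenue per month for one region as a list of dicts.

    The region is passed as a bound parameter rather than interpolated into
    the SQL string, which is how user-selected filters should reach a query.
    """
    conn.row_factory = sqlite3.Row  # rows become addressable by column name
    cur = conn.execute(
        "SELECT SUBSTR(sale_date, 1, 7) AS month, SUM(amount) AS revenue "
        "FROM sales WHERE region = ? GROUP BY month ORDER BY month",
        (region,),
    )
    return [dict(row) for row in cur]
```

The list of dictionaries returned by `monthly_revenue` is roughly the shape a dashboard widget would consume, with one entry per point on a chart.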

Popular BI Tools Supported by Leading Data Warehouse Solutions

Many data warehouse platforms natively support integrations with industry-leading BI tools, including:

- Tableau

- Power BI

- Looker

- Qlik Sense

- MicroStrategy

- Looker Studio (formerly Google Data Studio)

Security and Compliance in Data Warehouse Solutions

Robust security and compliance frameworks are integral to any data warehouse deployment, ensuring sensitive data remains protected and regulatory obligations are met. Platforms employ multilayered strategies to guard against threats and maintain data integrity.

Key Security Mechanisms

Encryption is commonly applied both in transit and at rest, safeguarding data from interception or unauthorized access. Role-Based Access Control (RBAC) and fine-grained permissions ensure only authorized users can view or manipulate data. Auditing functionalities record access and modifications, providing traceability for compliance and incident response.
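To make the interplay of RBAC and auditing concrete, here is a minimal Python sketch. The roles, table names, and log format are hypothetical, and real platforms enforce these rules inside the database engine (typically through grants) rather than in application code.

```python
from datetime import datetime, timezone

# Hypothetical role definitions: each role maps to a set of (table, action) grants.
ROLE_PERMISSIONS = {
    "analyst": {("sales", "read")},
    "engineer": {("sales", "read"), ("sales", "write")},
}

# In-memory audit trail; a real system would write to durable, tamper-evident storage.
AUDIT_LOG = []


def is_allowed(role, table, action):
    """Return True if the role has been granted the action on the table."""
    return (table, action) in ROLE_PERMISSIONS.get(role, set())


def access(user, role, table, action):
    """Check a request against the role's grants and record the decision."""
    allowed = is_allowed(role, table, action)
    AUDIT_LOG.append(
        {
            "at": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "table": table,
            "action": action,
            "allowed": allowed,
        }
    )
    return allowed
```

Denied requests are logged as well as granted ones, since failed attempts are often the most useful entries during incident response.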

Common Compliance Standards Addressed

Data warehouse platforms typically support compliance with international and industry-specific regulations and standards, such as:

- GDPR (General Data Protection Regulation)

- HIPAA (Health Insurance Portability and Accountability Act)

- SOC 2 (System and Organization Controls)

- PCI DSS (Payment Card Industry Data Security Standard)

These frameworks are addressed through a combination of technical controls and documented processes.

A secure data management workflow in a data warehouse environment involves encrypted data transfers, strict role-based access assignments, continuous monitoring of user activities, and automated alerts for anomalous behavior. Compliance is maintained through data masking, retention policies, and regular audits, ensuring data privacy and integrity at every stage of the information lifecycle.
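Data masking, one of the controls mentioned above, can be sketched as follows. This is an illustrative example only: the field names and the salt are hypothetical, and production systems generally rely on the platform's built-in masking policies and managed secrets rather than a hard-coded value.

```python
import hashlib


def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def pseudonymize(value, salt):
    """Replace an identifier with a stable, salted hash prefix.

    The same input always maps to the same token, so joins and counts
    still work on the masked data.
    """
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:16]


def mask_row(row, salt="demo-salt"):
    """Return a copy of the row with sensitive fields masked.

    The default salt is a placeholder for the example, not a real secret.
    """
    masked = dict(row)
    masked["email"] = mask_email(row["email"])
    masked["customer_id"] = pseudonymize(row["customer_id"], salt)
    return masked
```

Because the pseudonym is deterministic for a given salt, analysts can still group and join on `customer_id` without seeing the original identifier.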

Summary

In summary, data warehouse software is more than a storage solution: it is a catalyst for smarter decisions and future-ready analytics. As technology continues to evolve, these platforms will become more integral, helping organizations work with data quickly, securely, and intelligently. Adopting the latest advancements helps your business not only keep up but thrive in the data revolution.

Quick FAQs

What is data warehouse software used for?

Data warehouse software is used to consolidate, store, and manage large volumes of data from multiple sources, enabling advanced analytics and business intelligence.

How is a data warehouse different from a database?

A data warehouse is designed for analytical processing and reporting across historical data, whereas an operational (transactional) database is optimized for transaction processing and day-to-day operations.

Can small businesses benefit from data warehouse software?

Yes, many modern and cloud-based data warehouse solutions are scalable and cost-effective, making them accessible and valuable for businesses of all sizes.

How often is data updated in a data warehouse?

Data updates can vary from real-time to scheduled batch loads, depending on the organization’s needs and the architecture of the data warehouse.

Is specialized IT knowledge required to use data warehouse software?

While technical expertise is helpful for setup and advanced features, many platforms offer user-friendly interfaces and integration with popular BI tools to simplify usage for non-technical users.